
Nvidia unleashes H200 GPU to empower Generative AI used by Grok, ChatGPT, Bard and more

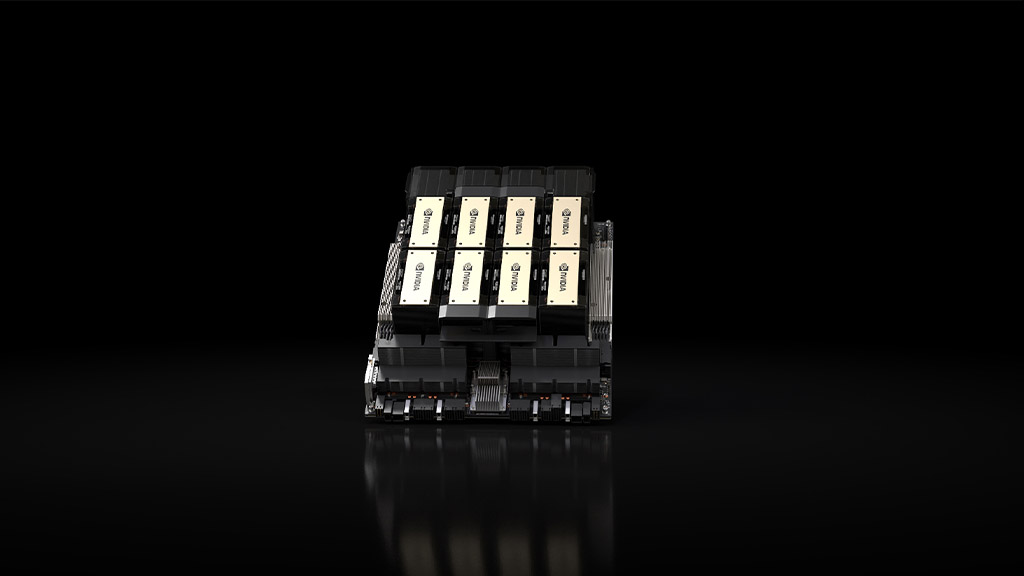

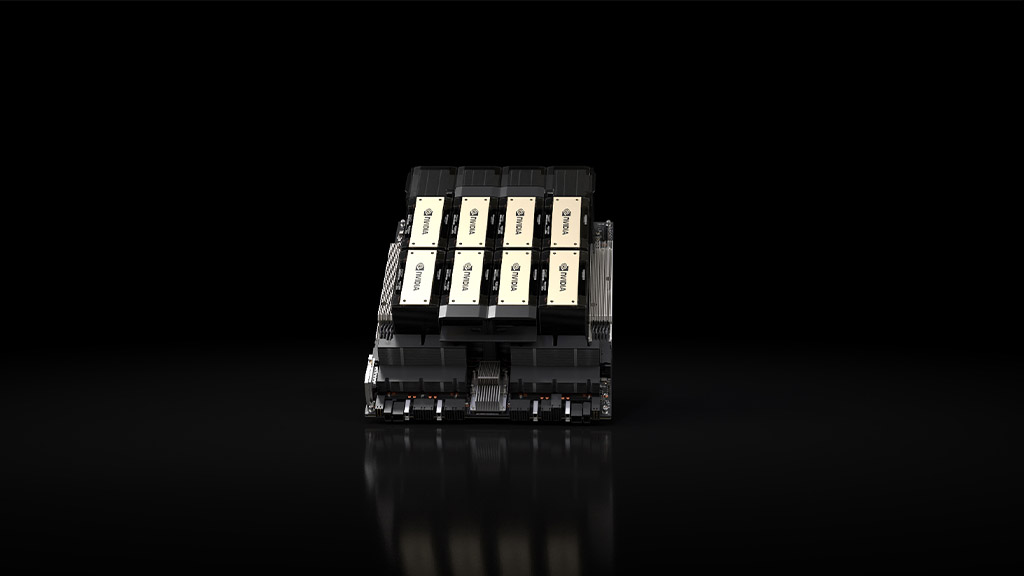

Nvidia has announced the Nvidia HGX H200 GPU platform for AI computing, aimed at generative AI applications and large language models such as xAI’s Grok, Google’s Bard, and OpenAI’s ChatGPT.

According to Nvidia, the new HGX H200 is based on the Hopper architecture and features the H200 Tensor Core GPU, whose advanced memory is designed to handle massive amounts of data for generative AI and high-performance computing workloads.

Nvidia says the new GPU is a perfect companion for the AI industry. With its new HBM3e memory, the H200 delivers 141GB of memory at 4.8 terabytes per second, which Nvidia says is nearly double the capacity and 2.4 times the bandwidth of the Nvidia A100 GPU.

The older Nvidia H100 chips cost between $25,000 and $40,000 each, according to an estimate from Raymond James, and thousands of them working together are needed to create the biggest models in a process called “training”.

The new H200 could provide double the inference speed on Llama 2, a 70-billion-parameter LLM, compared with the H100. The H200 will be available on HGX H200 server boards in four- and eight-way configurations. These boards are compatible with the hardware and software of HGX H100 systems, so customers of the new chip won’t have to upgrade their existing systems or spend extra to reach compatibility.
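To see why memory capacity and bandwidth matter for a model of this size, here is a rough back-of-the-envelope sketch in Python. It uses the 141GB and 4.8 terabytes per second figures quoted above and the 70-billion-parameter count for Llama 2. The 2 bytes per parameter (16-bit weights) and the idea that each generated token requires reading every weight once are simplifying assumptions for illustration, not figures from Nvidia, and the function names are hypothetical.

```python
# Back-of-the-envelope sketch, not a benchmark.
# Figures taken from the article:
PARAMS = 70e9                # Llama 2 parameter count
H200_MEMORY_GB = 141         # H200 memory capacity
H200_BANDWIDTH_TBPS = 4.8    # H200 memory bandwidth

# Assumption (not from the article): weights stored in 16 bits.
BYTES_PER_PARAM = 2


def weights_size_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the model weights alone, in gigabytes."""
    return params * bytes_per_param / 1e9


def bandwidth_bound_tokens_per_second(size_gb: float, bandwidth_tbps: float) -> float:
    """Rough ceiling on single-stream generation speed, assuming every
    weight is read from memory once per generated token."""
    return bandwidth_tbps * 1000 / size_gb


size_gb = weights_size_gb(PARAMS, BYTES_PER_PARAM)
fits_on_one_gpu = size_gb <= H200_MEMORY_GB
ceiling = bandwidth_bound_tokens_per_second(size_gb, H200_BANDWIDTH_TBPS)

print(f"Weights: {size_gb:.0f} GB, fits in {H200_MEMORY_GB} GB: {fits_on_one_gpu}")
print(f"Bandwidth-bound ceiling: about {ceiling:.0f} tokens per second")
```

Under these assumptions the weights alone come to about 140GB, which only just fits in the H200's memory and leaves almost no room for the working data a real deployment needs, so actual setups may use lower-precision weights or several GPUs. The estimate ignores batching, caching, and compute limits, so it should be read as an illustration of the scale involved, not a prediction of real performance.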

Nvidia said the new H200 chip can be deployed in every type of data center, including on-premises, cloud, hybrid-cloud, and edge computing. The new Nvidia H200 AI chip will be available to global AI industry players and cloud service providers in the second quarter of 2024.