xAI

Grok 2 mini gets a speed upgrade a week after beta launch

AI company xAI has announced that its Grok 2 mini model is now faster, following recent changes to its inference code.

Last week, xAI revealed two new models: Grok 2 and Grok 2 mini. They are a full-size and a smaller language model, respectively, and both offer improved performance compared to Grok 1.5.

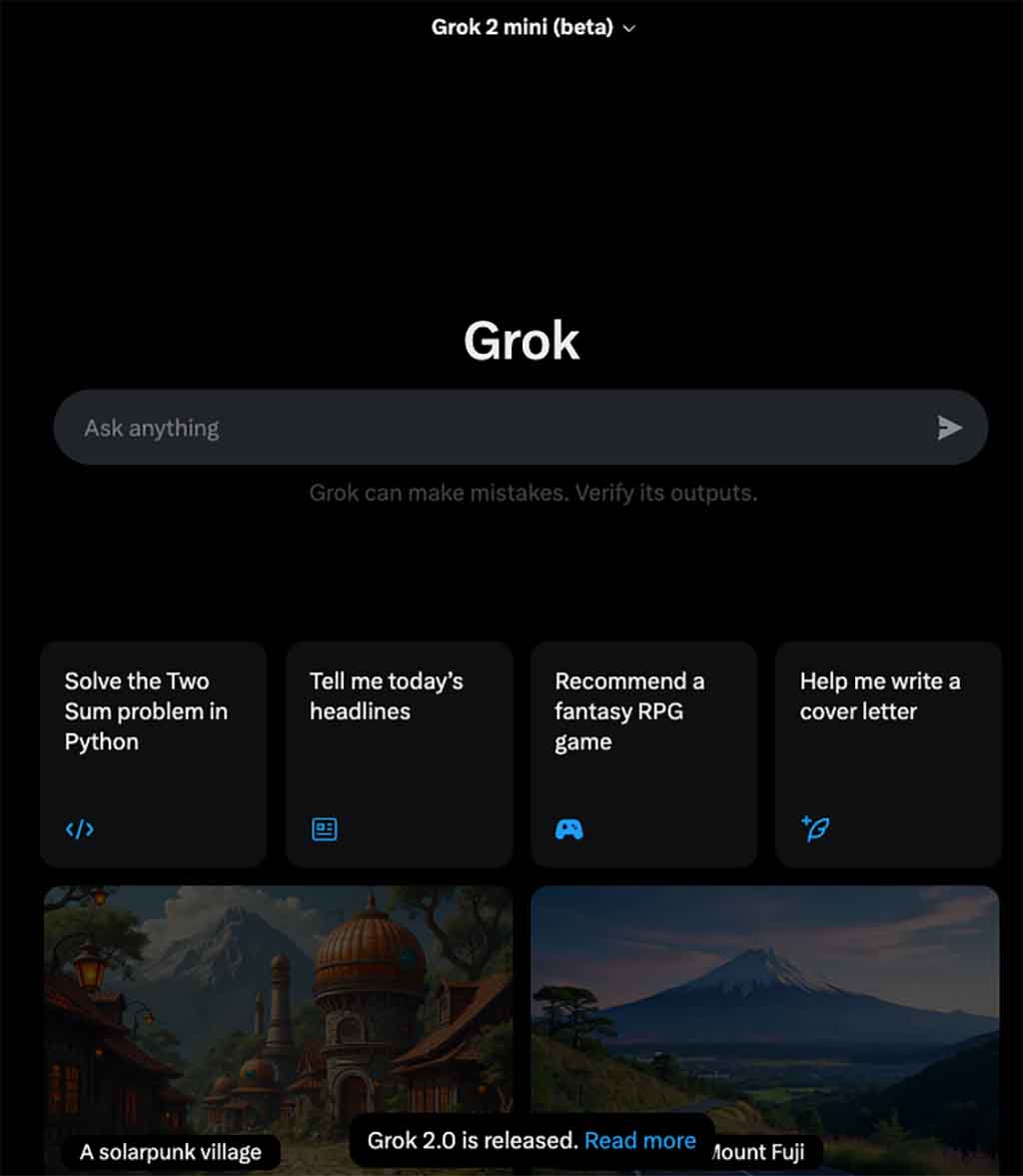

These models can also generate images from user prompts, which is a new feature. The company has partnered with Black Forest Labs to use its FLUX.1 model for image generation.

Grok 2 and Grok 2 mini are each tailored to different needs. The larger model, however, is more capable at tasks such as coding and writing content.

These models are available on the social media site X (formerly Twitter) with a paid subscription. Besides the improved performance, X users also get a better user interface. Both models launched in beta, and the xAI team is continuing to make optimizations to polish the user experience.

Grok 2 UI (Source – X)

Over the past week, the xAI team has improved the inference stack. These improvements are supported by custom algorithms for computation and communication kernels, as well as efficient batch scheduling and quantization.

In simple terms, these techniques draw on advances in LLM research that address the challenges of computational efficiency, memory consumption, and accuracy. As a result, the model can provide efficient, accurate, and accessible results to the end user. Meanwhile, the team expects more upgrades to Grok 2 as the beta test progresses.
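To give a rough sense of what quantization means in this context, here is a minimal Python sketch of symmetric 8-bit quantization. It is only an illustration of the general idea, not xAI's implementation: the function names and sample weights are hypothetical, and production inference stacks use far more elaborate schemes.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale factor converts between float and integer values.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


# Hypothetical sample weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored as a small integer plus one shared scale factor, which cuts memory use at the cost of a small rounding error. That is the efficiency-versus-accuracy trade-off described above.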

You can access Grok 2 or Grok 2 mini from the left-side menu on the X website or from the bottom bar on mobile.

Grok 2 mini is now 2x faster than it was yesterday. In the last three days @lm_zheng and @MalekiSaeed rewrote our inference stack from scratch using SGLang (https://t.co/M1M8BlXosH). This has also allowed us to serve the big Grok 2 model, which requires multi-host inference, at a… pic.twitter.com/G9iXTV8o0z

— ibab (@ibab) August 23, 2024

(Source)