Apple brings image editing AI model ‘MGIE’ and it’s quite interesting

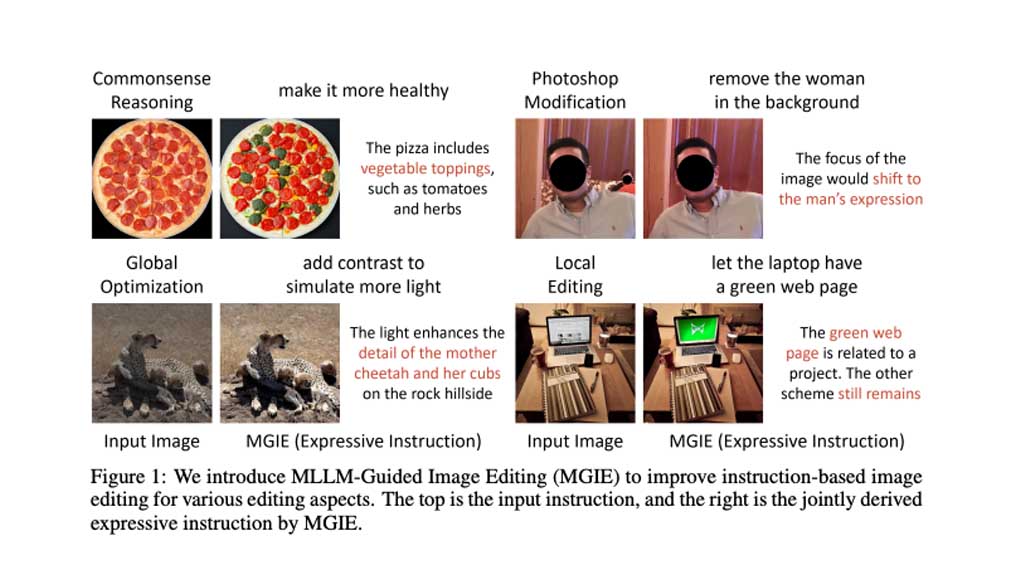

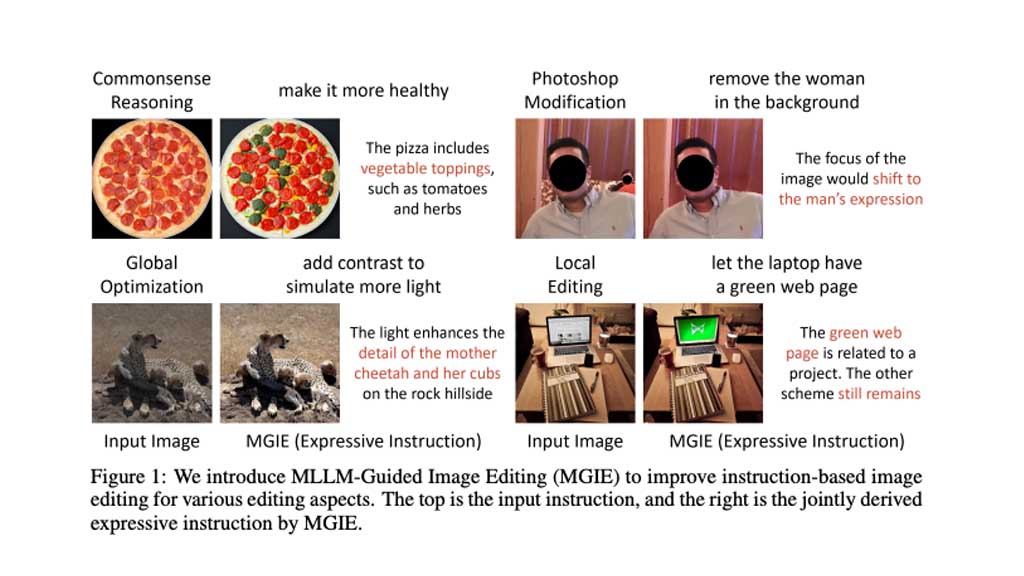

Apple has recently released MGIE, an open-source AI model that can edit images. MGIE, short for MLLM-Guided Image Editing, uses multimodal large language models (MLLMs) to interpret natural-language instructions and edit images accordingly.

Apple has developed this AI model in collaboration with the University of California, Santa Barbara. Its paper was published at the International Conference on Learning Representations (ICLR) 2024.

The Cupertino-based tech firm also explained how MGIE performs its key functionalities.

MGIE leverages MLLMs’ ability to process both text and images for prompt-based editing. Until now, Apple itself had not released a major generative AI image model.

An MLLM is a large language model that can process and understand information from multiple types of data, including text, images, video, and audio. This multimodal approach allows an MLLM to handle more complex tasks than conventional LLMs, which are primarily used for text-based processing.

According to VentureBeat, MGIE edits images in two steps. First, it passes the user prompt through the MLLM to derive an editing route, in simple words, a plan for how to edit the image.

For example, users can ask the model to make the “sky bluer” in an image, and MGIE will adjust the sky region of the image to bring out more blue.

The second step is to follow the editing route, using the MLLM’s guidance to make the visual changes through pixel-level manipulation. As a result, MGIE can handle different types of editing instructions, from color adjustments to more complicated tasks.
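To make the two-step idea concrete, here is a minimal toy sketch in Python. It is not Apple's code and does not use MGIE's actual API: a simple keyword lookup stands in for the MLLM, the "editing route" is a hypothetical `EditPlan` record, and the sketch assumes the sky occupies the top half of the image. All names here (`EditPlan`, `derive_plan`, `apply_plan`, `edit`) are invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical types and names, for illustration only; not MGIE's real API.
Pixel = tuple[int, int, int]   # (R, G, B), each 0-255
Image = list[list[Pixel]]      # rows of pixels


@dataclass
class EditPlan:
    """A toy 'editing route': which rows to touch and how to shift each channel."""
    row_start: int
    row_end: int                 # exclusive
    delta: tuple[int, int, int]  # amount added to (R, G, B)


def derive_plan(prompt: str, image: Image) -> EditPlan:
    """Step 1 (stand-in for the MLLM): turn a prompt into an explicit plan.

    A keyword lookup replaces the language model here. It assumes the sky
    is the top half of the image, which a real model would have to infer.
    """
    height = len(image)
    text = prompt.lower()
    if "sky" in text and "blue" in text:
        return EditPlan(0, height // 2, (-20, -10, 40))
    # Unknown prompt: a plan that changes nothing.
    return EditPlan(0, 0, (0, 0, 0))


def clamp(value: int) -> int:
    """Keep a channel value inside the valid 0-255 range."""
    return max(0, min(255, value))


def apply_plan(image: Image, plan: EditPlan) -> Image:
    """Step 2: follow the plan with a pixel-level edit, returning a new image."""
    edited = []
    for y, row in enumerate(image):
        if plan.row_start <= y < plan.row_end:
            row = [tuple(clamp(c + d) for c, d in zip(px, plan.delta)) for px in row]
        edited.append(list(row))
    return edited


def edit(prompt: str, image: Image) -> Image:
    """Run both steps: prompt -> plan -> edited image."""
    return apply_plan(image, derive_plan(prompt, image))
```

Calling `edit("Make the sky bluer", image)` on a small image shifts only the top rows toward blue and leaves the rest untouched. The real model replaces both the keyword lookup and the fixed color shift with learned components; the sketch only mirrors the split between planning the edit and carrying it out.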

For now, Apple has not announced any product that uses the MGIE AI model, but it is quite possible that the iPhone maker will use this research in future products.

Beyond Apple, such a model could be a big deal for image-based generative AI applications. You can check the example edits of the MGIE system below.

Apple MGIE AI model editing images based on user prompts (credit: VentureBeat)