
xAI Grok-1 AI engine explained in detail

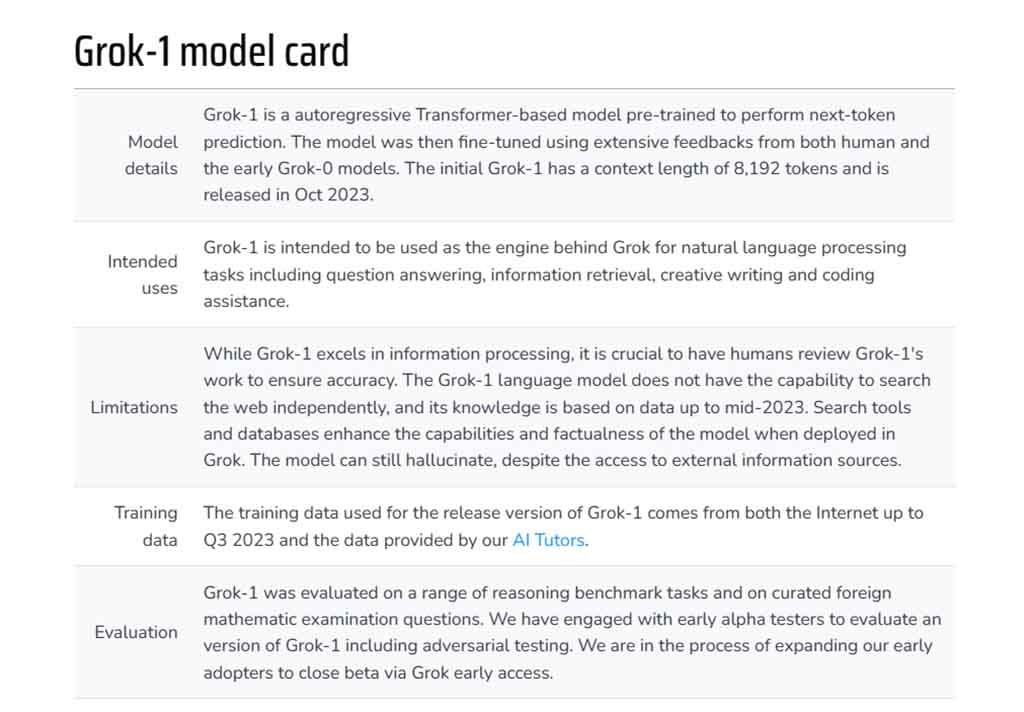

On November 4th, xAI announced Grok, an AI assistant powered by Grok-1, a large language model (LLM). Grok-1 was designed and developed by xAI, the AI research company Elon Musk founded in July this year.

In technical terms, Grok-1 is an autoregressive Transformer-based model pre-trained to perform next-token prediction: given a sequence of tokens, it predicts the next one, appends it to the sequence, and repeats. The model was then fine-tuned using feedback from both humans and AI models. Grok-1 has a context length of 8,192 tokens.
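To make "autoregressive next-token prediction" and the 8,192-token context length concrete, here is a minimal Python sketch of greedy decoding. It is not xAI's code: `generate` and `toy_model` are hypothetical names, the toy model stands in for a trained Transformer, and greedy selection is only one simple decoding strategy. The point is the loop: the model sees at most the most recent 8,192 tokens, predicts one token, and that token is appended and fed back in.

```python
from typing import Callable, List

CONTEXT_LENGTH = 8192  # Grok-1's reported context length, in tokens


def generate(
    next_token_logits: Callable[[List[int]], List[float]],
    prompt: List[int],
    max_new_tokens: int,
    context_length: int = CONTEXT_LENGTH,
) -> List[int]:
    """Greedy autoregressive decoding: predict one token at a time and feed it back in."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # The model can only attend to the most recent `context_length` tokens.
        window = tokens[-context_length:]
        logits = next_token_logits(window)
        # Greedy choice: the highest-scoring token id becomes the next token.
        next_id = max(range(len(logits)), key=logits.__getitem__)
        tokens.append(next_id)
    return tokens


# Hypothetical stand-in for a trained model: a 10-token vocabulary that
# always favours (last token + 1) mod 10.
def toy_model(window: List[int]) -> List[float]:
    vocab_size = 10
    target = (window[-1] + 1) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


print(generate(toy_model, prompt=[3], max_new_tokens=5))
```

Each generated token becomes part of the input for the next step, which is why anything older than the context window simply drops out of the model's view.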

The training data for Grok-1 consists of internet data up to the third quarter of 2023, plus data provided by xAI's AI tutors.

(source – xAI)

Background:

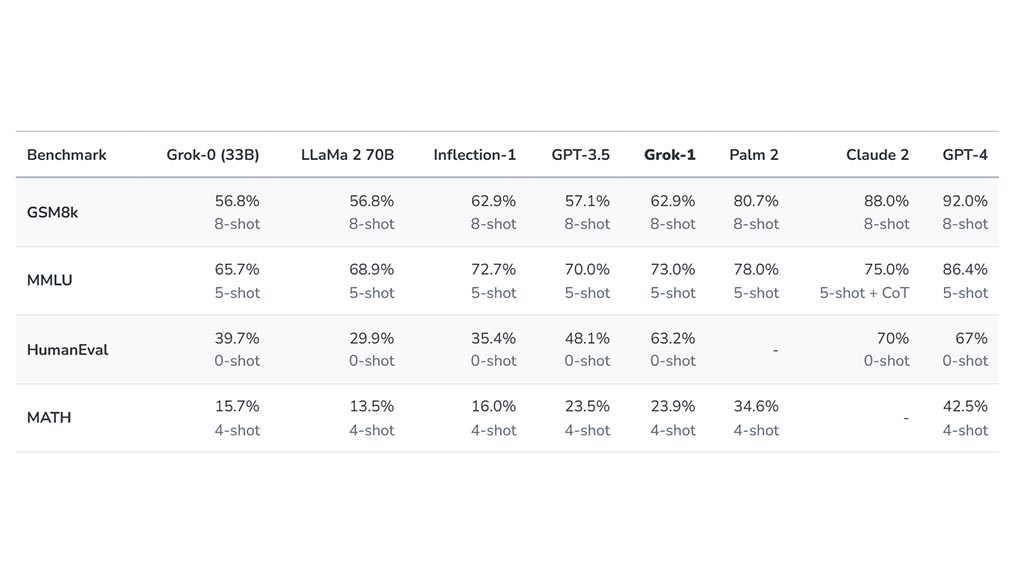

In July, xAI began training Grok-0, a prototype with 33 billion parameters. This early model approaches the capabilities of LLaMA 2 (70B) on standard language model benchmarks while using only half of LLaMA 2's training resources.

After two more months of training, the prototype evolved into Grok-1, a new language model that scores 63.2% on the HumanEval coding task and 73% on the MMLU benchmark.

Evaluation:

xAI evaluated Grok-1 on several standard machine learning benchmarks that measure math and reasoning ability, including the following:

- GSM8k: Middle school math word problems, (Cobbe et al. 2021), using the chain-of-thought prompt.

- MMLU: Multidisciplinary multiple choice questions, (Hendrycks et al. 2021), provided 5-shot in-context examples.

- HumanEval: Python code completion task, (Chen et al. 2021), zero-shot evaluated for pass@1.

- MATH: Middle school and high school mathematics problems written in LaTeX, (Hendrycks et al. 2021), prompted with a fixed 4-shot prompt.
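The HumanEval pass@1 figure can be computed with the unbiased pass@k estimator described by Chen et al. (2021): for each problem, generate `n` samples, count the `c` that pass the unit tests, compute `1 - C(n-c, k) / C(n, k)`, and average over problems. The sketch below uses hypothetical per-problem results; xAI has not published its scoring script, so treat this as an illustration of the metric rather than the exact procedure used for Grok-1.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem (Chen et al. 2021).

    n: number of code samples generated for the problem
    c: how many of those samples passed the unit tests
    k: the k in pass@k
    """
    if n - c < k:
        # Every size-k subset of the samples contains at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# The benchmark score is the mean over problems. With one sample per problem
# (n = 1, k = 1), pass@1 is simply the fraction of problems solved.
results = [(1, 1), (1, 0), (1, 1), (1, 1)]  # hypothetical (n, c) per problem
score = sum(pass_at_k(n, c, 1) for n, c in results) / len(results)
print(f"pass@1 = {score:.1%}")
```

"Zero-shot" here means the prompt contains only the function signature and docstring to complete, with no solved examples, whereas the MMLU (5-shot) and MATH (4-shot) prompts prepend that many worked examples.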

The benchmark results for Grok-1 on each of these evaluations are shown below:

(source – xAI)

The results show that Grok-1 surpasses other models in its compute class, including GPT-3.5 (the model behind ChatGPT) and Inflection-1. However, GPT-4 still outperforms Grok-1, which xAI attributes to GPT-4 having been trained on significantly more data and compute.

School Test:

xAI also tested Grok-1 on the 2023 Hungarian national high school mathematics finals, held in May this year, and compared its results with those of Claude-2 and GPT-4.

According to xAI, Grok passed the exam with a C (59%), Claude-2 also earned a C (55%), and GPT-4 earned a B (68%). All models were evaluated at a temperature of 0.1 using the same prompt. Separately, xAI has announced an early access beta program for Grok.
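The "temperature of 0.1" setting controls how random each model's output is. In the standard formulation, the model's raw token scores (logits) are divided by the temperature before a softmax turns them into probabilities; a low value such as 0.1 concentrates almost all probability on the top-scoring token, making answers close to deterministic and the side-by-side comparison easier to reproduce. The Python sketch below assumes that standard formulation with made-up logits; the article does not say how each vendor implements sampling.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities, sharpened (T < 1) or flattened (T > 1)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.5, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At T = 1.0 the second and third tokens keep a meaningful share of the probability; at T = 0.1 nearly all of it moves to the first token.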